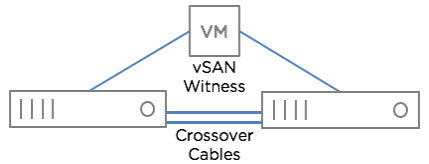

This installation runs a two-node, all-flash vSAN cluster whose hosts are directly connected to each other over two 10GbE links. Two-node vSAN clusters are supported in vSAN 6.1 and later. The vSAN replication traffic runs on the 10GbE network interface cards (NICs), and the vSAN witness traffic runs on the 1GbE NICs. Direct-connecting the hosts avoids the cost of two 10GbE switches, which can save anywhere from $2,000 to $20,000 depending on the switches you would otherwise select.

The vSAN cluster was running fine when we took down one of the nodes to install an additional four-port NIC. Before shutting a node down, you should migrate all of its virtual machines (VMs) to the other node and place the node in maintenance mode. When we shut down the node, we only made sure that the vSAN storage was available before we placed the node in maintenance mode. When we went to install the card, we found that Dell had sent the wrong NIC: it was a half-height card, and the server only had full-height slots available. We brought the node back up but forgot to take it out of maintenance mode. By default, if a node stays down for more than one hour, vSAN assumes that the node has permanently failed and is not coming back. If other nodes are available, vSAN attempts to replicate the data to another node. In this case there were no additional nodes available, because it was only a two-node cluster.
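To make the timing concrete, here is a minimal Python sketch of the logic described above. It is an illustration only, not how vSAN implements its repair timer: the function names are hypothetical, the 60-minute value is the one-hour default mentioned above, and the rebuild check assumes a simple two-copy mirror in which each copy must live on a different data node.

```python
from datetime import timedelta

# Assumption: the one-hour default described in the text.
DEFAULT_REPAIR_DELAY = timedelta(minutes=60)


def repair_timer_expired(absent_for, repair_delay=DEFAULT_REPAIR_DELAY):
    """Return True once a node has been absent longer than the repair delay."""
    return absent_for > repair_delay


def has_rebuild_target(data_nodes, copies=2):
    """Return True if a spare data node exists to hold a rebuilt copy.

    Simplified model: every copy sits on its own data node, so a rebuild
    needs more data nodes than copies. The witness is not counted because
    it holds no data.
    """
    return data_nodes > copies


# Our situation: node absent for about 24 hours in a two-node cluster.
timer_expired = repair_timer_expired(timedelta(hours=24))
can_rebuild = has_rebuild_target(data_nodes=2)
```

In this model the timer has expired, but there is nowhere to rebuild, so the cluster simply keeps running on a single copy until the absent node returns and resynchronizes.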

We brought the node out of maintenance mode roughly 24 hours after it was initially shut down, but it would not synchronize with the other node. We were using the dual-port 10GbE NICs for both vSAN replication and vMotion traffic. Both 10GbE NICs were connected to the same vSphere Standard Switch, and the vSAN replication network and the vMotion network were separated by tagged virtual local area networks (VLANs). Each node could ping the other on the vMotion network. On the vSAN replication network, each node could ping its own vSAN IP address but could not ping the other node. Everything had been working fine before the node was taken down, and no networking changes had been made.
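The checks described above can be sketched as a small Python helper. This is a hedged example rather than the exact procedure we followed: the interface name `vmk2` and the address `192.168.50.2` are placeholders, and the helper only builds the `vmkping` command line (to be run from the ESXi shell) and summarizes results you record yourself.

```python
def vmkping_command(vmk_interface, target_ip, count=3):
    """Build a vmkping argument list for one VMkernel interface.

    -I selects the outgoing VMkernel interface and -c sets the packet count.
    """
    return ["vmkping", "-I", vmk_interface, "-c", str(count), target_ip]


def isolate_fault(checks):
    """Summarize ping results per network.

    `checks` maps a network name to {"local": bool, "peer": bool}, where
    "local" is a ping to the node's own address on that network and "peer"
    is a ping to the other node.
    """
    findings = []
    for network, result in checks.items():
        if not result["local"]:
            findings.append(f"{network}: local VMkernel interface is not responding")
        elif not result["peer"]:
            findings.append(f"{network}: local interface is up but the peer is unreachable")
    return findings


# Placeholder interface and address; substitute your own vSAN VMkernel port.
command = vmkping_command("vmk2", "192.168.50.2")

# The symptoms we saw: vMotion fine, vSAN peer unreachable.
observed = {
    "vMotion": {"local": True, "peer": True},
    "vSAN": {"local": True, "peer": False},
}
findings = isolate_fault(observed)
```

Testing each VMkernel interface separately matters here because both networks shared the same physical ports, so a working vMotion ping did not prove that the vSAN path was healthy.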

We tried removing one 10GbE port at a time from the vSphere Standard Switch, but the problem persisted on both ports. Swapping the cables didn't help either. We opened a support case with Dell, because we had purchased the vSAN software from them and had to use their support instead of VMware's. After roughly seven hours of troubleshooting, the Dell support engineer noticed that the firmware on both 10GbE NICs was one revision behind the supported firmware. We updated the firmware on the node that had been shut down, and the nodes could then see each other on the vSAN replication network. We ended up moving the vMotion and vSAN replication networks onto two separate vSphere Standard Switches to make any future issues easier to troubleshoot. As soon as the nodes could see each other on the vSAN replication network, they synchronized about 60 GB of data in a few minutes. All-flash and 10GbE are really nice!

Bottom line: 10GbE NICs are extremely sensitive to firmware versions. A few years ago we ran into a connectivity issue caused by the firmware on another 10GbE card. Whenever you use 10GbE NICs, check the VMware Compatibility Guide and verify that you're running the supported firmware for them. We didn't suspect the NIC firmware because everything had been running fine before we shut down the node. If you run into a similar issue, hopefully this article will save you a lot of time and frustration.
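On ESXi, `esxcli network nic get -n <vmnic>` reports the driver and firmware version of a NIC. The sketch below shows one way to pull the firmware string out of that output and flag NICs that don't match the version you looked up in the compatibility guide. The sample text, NIC names, and version numbers are made up for illustration; check the real output on your own hosts, since its layout can differ between drivers and ESXi versions.

```python
import re

# Illustrative excerpt only; not captured from the hosts in this article.
SAMPLE_NIC_OUTPUT = """\
   Driver Info:
         Bus Info: 0000:04:00.0
         Driver: ixgben
         Firmware Version: 18.0.17
         Version: 1.7.1
"""


def firmware_version(nic_get_output):
    """Return the text after 'Firmware Version:' or None if it is absent."""
    match = re.search(
        r"^[ \t]*Firmware Version:[ \t]*(.*)$", nic_get_output, re.MULTILINE
    )
    if not match:
        return None
    return match.group(1).strip() or None


def nics_needing_update(reported, required):
    """Return the sorted names of NICs whose firmware differs from `required`.

    `reported` maps a NIC name (for example 'vmnic4') to its firmware string.
    """
    return sorted(nic for nic, fw in reported.items() if fw != required)


# Hypothetical versions: one NIC is behind the required firmware.
reported = {"vmnic4": firmware_version(SAMPLE_NIC_OUTPUT), "vmnic5": "18.3.6"}
outdated = nics_needing_update(reported, required="18.3.6")
```

Running a check like this on every node before and after maintenance would have pointed us at the firmware long before the seven-hour support call.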

How to fix your vSAN ESXi Node that does not Synchronize after you Reboot it

Tags: Virtualization